Introducing Prime Intellect Compute: The compute exchange

We're excited to announce that Prime Intellect Compute, our platform for aggregating and orchestrating global GPU resources, is now fully public.

Our mission with our compute platform is to democratize and commoditize instant compute.

Our Masterplan: Commoditizing Compute and Intelligence for Accelerated Progress

- Aggregate and orchestrate global compute (Fully public from today): Our platform brings together a diverse array of GPUs, starting with on-demand instances and soon expanding to spot instances, varied rental durations, and multi-node clusters.

- Develop distributed training frameworks to enable collaborative model development across global, heterogeneous hardware (OpenDiLoCo coming soon)

Today's announcement focuses on the first crucial step of this plan, unlocking the other steps.

Compute: The Currency of the Future

Compute is becoming one of the most critical currencies and potentially the most precious commodity. Unlike traditional markets, the compute market is uniquely elastic, with price dictating usage levels. As compute becomes more affordable and accessible, its applications will expand exponentially, unlocking new use cases from everyday tasks to scientific research.

The Current State of AI Compute Markets

Despite an explosion in demand, the AI compute market remains primitive and fragmented. Nvidia's incentives contribute to this fragmentation, while providers like AMD, Groq and others further broaden the landscape of specialized chips to choose from. The market still lacks the efficiency seen in exchanges.

- Fragmented Pricing: H100 GPU costs fluctuate wildly from 18 per hour across providers.

- Underutilization: High-end GPUs often sit idle 20-50%+ of the time.

- Scalability Bottleneck: Large clusters (16-128+ GPUs) are rarely available on-demand or for short durations.

- Missing Market Mechanisms: No standardized spot markets or futures contracts exist.

- Orchestration Challenges: Spot instances and nodes are underutilized due to technical barriers.

- Opaque Markets: Deals still run through non-transparent, analog channels of phone calls and negotiations.

- Multi-Node Cluster Reliability: A recent publication by RekaAI shows that the reliability of multi-node clusters between providers can vary by up to 100x, presenting significant challenges for large-scale AI training.

These inefficiencies hold back AI progress and waste one of the most valuable commodities.

Prime Intellect — Compute

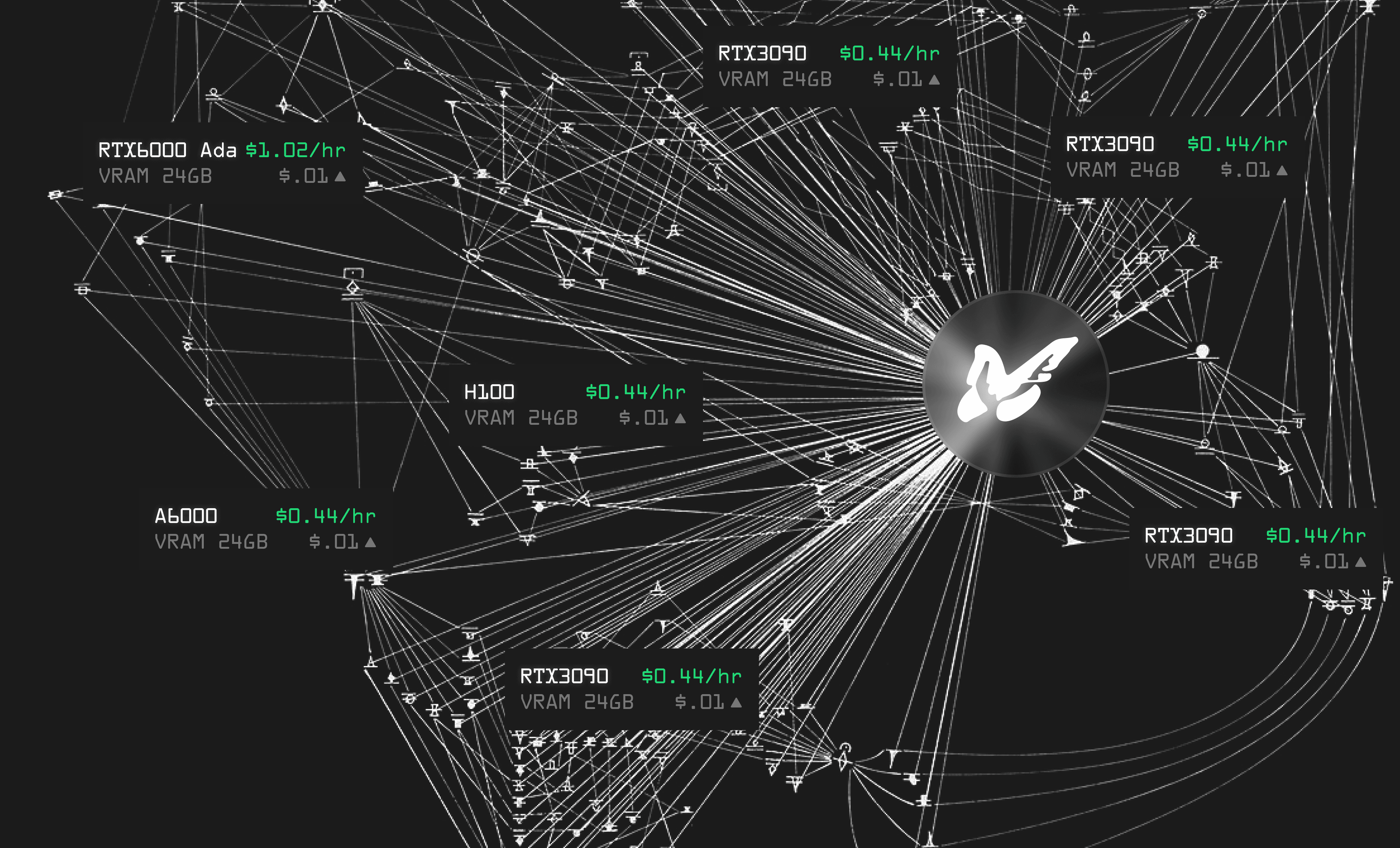

To address these challenges and create an efficient AI compute market, we're launching Prime Intellect Compute, a marketplace that aggregates global compute resources. Our goal is to build a central compute exchange that reduces price discrepancies and improves market efficiencies.

Our vision: A frictionless market where you can instantly secure cost-effective compute, from a single H100 to 512+ GPU clusters, for any duration. Prime Compute is transforming AI compute into a true commodity market.

Current inefficiencies hinder researchers and organizations from developing cutting-edge AI models and prevent compute providers from benefiting from higher utilization.

We envision a future where you can instantly get the lowest bid on any request, from a single Nvidia H100 on-demand to renting 512 GPUs for two months at the cheapest cost.

Key features include:

- Unified Resource Pool: We aggregate GPU resources from leading providers, offering the most cost-effective options based on chip type, quantity, and duration.

- We’ve already integrated 12 clouds, with many more coming (see the provider overview).

- Instant Access: Users can access up to 8 GPUs on-demand immediately, with plans to offer larger multi-node clusters (16-128+ GPUs) in the near future.

- Competitive Pricing: We're bootstrapping supply with our own below-market-price compute, offering H100s at $1.5-4/hr on-demand.

- Utilization Optimization: Our platform aims to significantly increase GPU utilization rates by efficiently matching supply and demand across a global pool of resources.
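To make the Unified Resource Pool idea concrete, here is a minimal sketch of picking the cheapest matching offer by chip type, quantity, and duration. It is an illustration only, not the platform's API: the `Offer` fields, the function names, the provider names, and every price below are hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Offer:
    """One listing in an aggregated pool (all fields are hypothetical)."""
    provider: str
    gpu_type: str
    gpus_available: int
    price_per_gpu_hour: float  # USD, placeholder value
    max_hours: float           # longest rental this offer allows


def cheapest_offer(offers: List[Offer], gpu_type: str,
                   gpu_count: int, hours: float) -> Optional[Offer]:
    """Return the lowest-priced offer that satisfies the request, or None."""
    eligible = [
        o for o in offers
        if o.gpu_type == gpu_type
        and o.gpus_available >= gpu_count
        and o.max_hours >= hours
    ]
    return min(eligible, key=lambda o: o.price_per_gpu_hour, default=None)


def total_cost(offer: Offer, gpu_count: int, hours: float) -> float:
    """Total price in USD for the requested GPUs and duration."""
    return offer.price_per_gpu_hour * gpu_count * hours


# Made-up listings, for illustration only.
offers = [
    Offer("provider-a", "H100", 8, 3.50, 720),
    Offer("provider-b", "H100", 4, 2.10, 720),   # cheapest, but too few GPUs
    Offer("provider-c", "H100", 8, 2.75, 24),
    Offer("provider-d", "RTX4090", 8, 0.40, 720),
]

best = cheapest_offer(offers, gpu_type="H100", gpu_count=8, hours=12)
```

A real exchange would also have to weigh reliability, interconnect, and region, which this sketch ignores; it only shows why a single pool makes the price comparison a one-line query instead of a round of phone calls.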

Our Roadmap

Here are some of the features we plan to build over the coming months. Let us know if there is something missing that you’d like to see:

Multi-Node Clusters (8-1024+ GPUs)

- On-Demand Large-Scale Compute: Access 16-128+ interconnected GPUs instantly, breaking free from long-term contracts. We plan to integrate with tons of multi-node GPU supply across different durations.

- Instant quotes: Get instant prices for various durations, from days to years.

- Reliability Insights: Understand the reliability of different cluster options.

Globally-Distributed Training

We're researching and implementing distributed training techniques to enable efficient multi-node training across clusters. This is crucial for creating a truly liquid market where compute resources are interchangeable. It also makes it possible to put underutilized GPUs to effective use.

- Distributed Framework: Train models across multiple clusters with low communication requirements.

- Fault Tolerance: Ensure seamless operation even if some nodes fail.

- Network Optimization: Minimize impact of varying network latencies.

- Smart Orchestration: Optimize resource allocation and job scheduling.

- Spot Instance Training: Reduce costs by 2-3x using our spot instance optimization.

- Dynamic Scaling: Adjust total compute resources in real time during training, optimizing for cost and performance.
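As a rough illustration of what "low communication requirements" can mean, the sketch below uses plain local SGD with periodic parameter averaging: each worker trains on its own data shard for several steps and only then synchronizes. This is a generic textbook technique chosen for illustration, not OpenDiLoCo and not our implementation; the function name, the toy data, and the hyperparameters are all assumptions.

```python
import random


def local_sgd(shards, rounds, local_steps, lr=0.05, seed=0):
    """Fit y = w * x with local SGD, averaging weights once per round."""
    rng = random.Random(seed)
    w_global = 0.0
    for _ in range(rounds):
        local_weights = []
        for shard in shards:              # each shard stands in for one cluster
            w = w_global                  # start from the last synced weight
            for _ in range(local_steps):  # no communication inside this loop
                x, y = rng.choice(shard)
                grad = 2 * (w * x - y) * x  # gradient of (w*x - y)^2
                w -= lr * grad
            local_weights.append(w)
        # The only communication step: average the workers' weights.
        w_global = sum(local_weights) / len(local_weights)
    return w_global


# Toy, noise-free data with true weight 3.0, split across two "clusters".
shards = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(1.5, 4.5), (0.5, 1.5)],
]

w = local_sgd(shards, rounds=20, local_steps=10)
```

Here each worker takes 200 gradient steps but exchanges weights only 20 times, instead of after every step as in fully synchronous data-parallel training. That reduction in synchronization frequency is what makes training across slow or variable links between clusters plausible.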

Platform Improvements

- Cloud and Datacenter Integrations: Connect with as many cloud providers and datacenters as possible to increase GPU supply. Onboard 20+ compute providers.

- Compute Variety: Integrate spot, on-demand, and long-term options across various chip types.

Upcoming Features

- Allow users to contribute their GPUs directly

- Dynamic Pricing: Efficient price discovery to maximize supplier profits and meet market demand.

- Team Collaboration: Introduce team accounts for better workflow management.

- Provider Reliability Metrics: Transparent performance data for informed decisions.

- Storage Solutions: Implement robust data storage options.

- Advanced Scheduling: Set up jobs, receive notifications, and manage resources.

- Enhanced Security: 2FA and custom SSH key support.

- Containerization: Support for custom Docker images to ensure consistent environments.

- Ray Integration: Leverage Ray for distributed computing tasks.

- Community Features: Referral system and collaborative tools.

Developer Tools

- Prime Intellect API: Programmatic access to our platform's capabilities.

- Command-Line Interface (CLI): For power users and automation.

- Documentation: Comprehensive guides, FAQs, and tutorials.

Find Instant Compute. Train models. Democratize Intelligence.

AI compute needs to be accessible to all, accelerating progress and ensuring democratized access! We invite everyone to join us on this journey:

- Sign up to find instant compute

- H100s starting at 0.87. 4090s at $0.3.

Let's accelerate progress by making compute and machine intelligence more open and accessible for all.

Feel free to upvote us on Product Hunt.