INTELLECT-1 Release: The First Globally Trained 10B Parameter Model

We're excited to release INTELLECT-1, the first 10B parameter language model collaboratively trained across the globe. This represents a 10× scale-up from our previous research and demonstrates that large-scale model training is no longer confined to large corporations but can be achieved through distributed, community-driven approaches. The next step is scaling this even further to frontier model sizes and ultimately open source AGI.

Today, we're releasing:

- Detailed Technical Report

- INTELLECT-1 base model, intermediate checkpoints and post-trained model

- Chat Interface to try it out: chat.primeintellect.ai

- Pre-training Dataset

- Post-training datasets by Arcee AI

- PRIME framework

Scaling Globally-Distributed Training

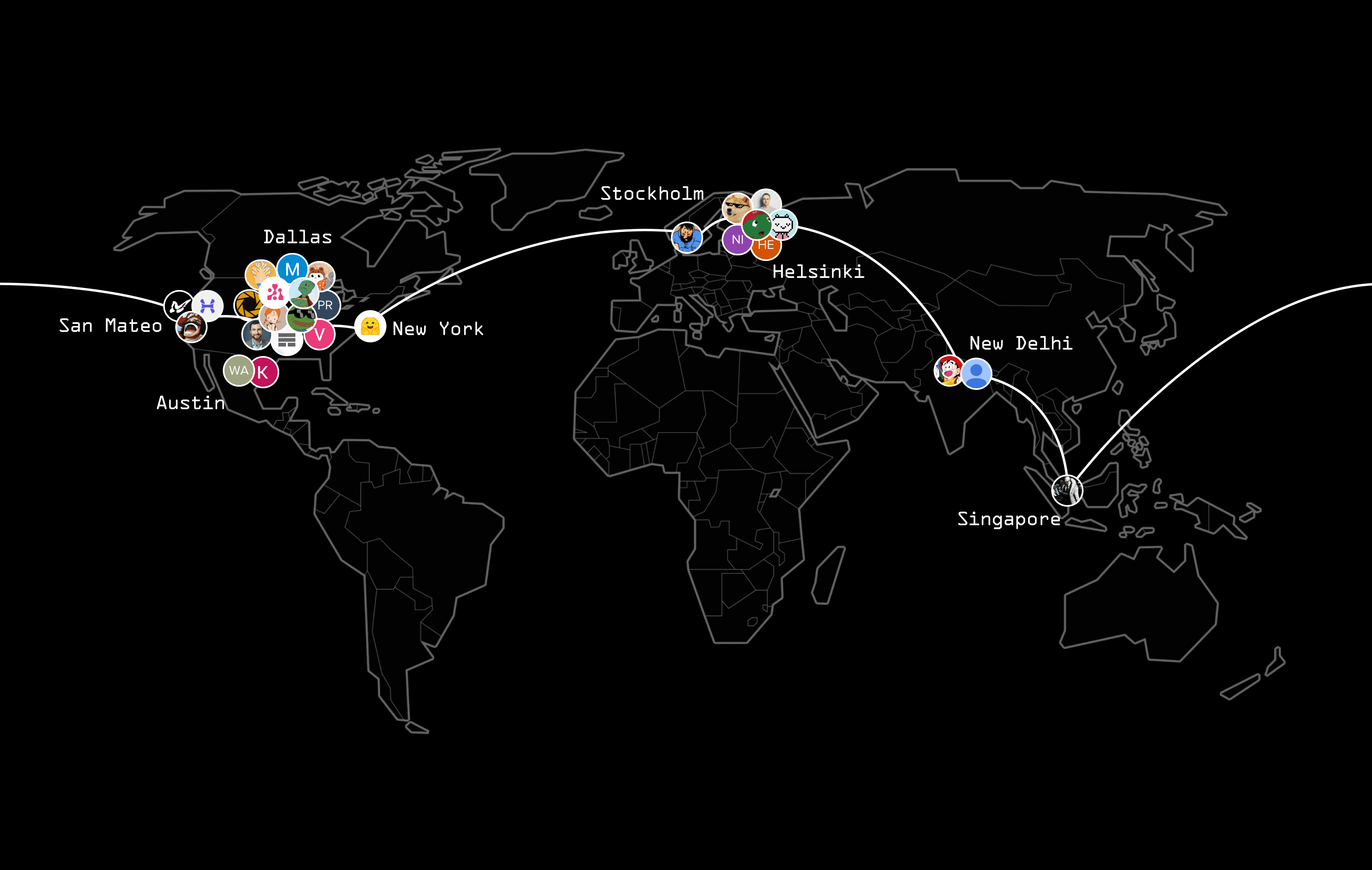

We present the first large-scale experiment collaboratively training a 10 billion parameter model over 1 trillion tokens across five countries and three continents on up to 112 H100 GPUs simultaneously. We achieve an overall compute utilization of 83% across continents and 96% when training exclusively on nodes distributed across the entire United States, introducing minimal overhead compared to centralized training approaches.

Our results show that INTELLECT-1 can maintain training convergence and high compute utilization despite severe bandwidth constraints and node volatility, opening new possibilities for distributed, community-driven training of frontier foundation models.

Technical Progress

Our research and engineering work on PRIME helped us achieve this milestone.

Key innovations in PRIME include the ElasticDeviceMesh, which manages dynamicglobal process groups for fault-tolerant communication across the internet andlocal process groups for communication within a node, live checkpoint recovery,kernels, and a hybrid DiLoCo-FSDP2 implementation.

Using PRIME with DiLoCo and our custom int8 all-reduce, we achieve an overall 400x reduction in communication bandwidth compared to traditional data-parallel training settings while delivering comparable performance at the 10B scale.

Training Details and Datasets

INTELLECT-1 is based on the Llama-3 architecture, comprising:

- 42 layers with 4,096 hidden dim

- 32 attention heads

- 8,192 sequence length

- 128,256 vocab size

The model was trained on a carefully curated 1T token dataset mix (Huggingface Link)

- 55% FineWeb-Edu

- 20% Stack v2

- 10% FineWeb

- 10% DCLM-baseline

- 5% OpenWebMath

Training completed over 42 days using:

- WSD learning rate scheduler

- 7.5e-5 inner learning rate

- Auxiliary max-z-loss for stability

- Nesterov momentum outer optimizer

- Dynamic on-/off-boarding of compute resources with up to 14 nodes

Compute Efficiency

The system achieved great training efficiency across different geographical settings:

- 96% compute utilization training across the entire United States (103s median sync time)

- 85.6% compute utilization for transatlantic training (382s median sync time)

- 83% compute utilization for global distributed training (469s median sync time)

Post-Training

After completing the globally distributed pretraining phase, we applied several post-training techniques in collaboration with Arcee AI to enhance INTELLECT-1s’s capabilities and task-specific performance. Our post-training methodology consisted of three main phases including extensive SFT (16 runs), DPO (8 runs), and strategic model merging using MergeKit.

For more info check out our detailed technical report.

Conclusion and Next Steps: Scaling to the Frontier

The successful training of INTELLECT-1 demonstrates a key technical advancement in enabling a more open and democratized AI ecosystem, with significant implications for the future development and governance of advanced artificial intelligence systems.

Through our open-source PRIME framework, we've established a foundation for distributed AI development that can rival centralized training facilities.

Looking ahead, we envision scaling this approach to frontier model sizes by:

- Expanding our global compute network

- Implementing new economic incentives to drive community participation

- Further optimizing our distributed training architecture for even larger models

This work represents a crucial step toward democratizing AI development and preventing the consolidation of AI capabilities within a few organizations. By open-sourcing INTELLECT-1's model, checkpoints, and training framework, we invite the global AI community to join us in pushing the boundaries of distributed training.

To get involved, visit our GitHub repository or join our Discord community. Together, we can build a more open, collaborative future for AI development.