Distributed Training in the Inference-Time-Compute Paradigm

We have started working on LLM reasoning and have a few updates to share:

- Research preview: By fine-tuning on synthetic reasoning traces, we can provide better policy initializations for online reinforcement learning. We use this insight to train INTELLECT-MATH, a state-of-the-art model for mathematical reasoning (prior to the release of Deepseek-R1) in the 7B parameter regime that reduces the training time required to match the existing state-of-the-art by 10x. Additionally, we release NuminaMath-QwQ-CoT-5M, a dataset containing five million reasoning traces across 860k mathematics questions verified for correctness. All of our code as well as weights and data are open source.

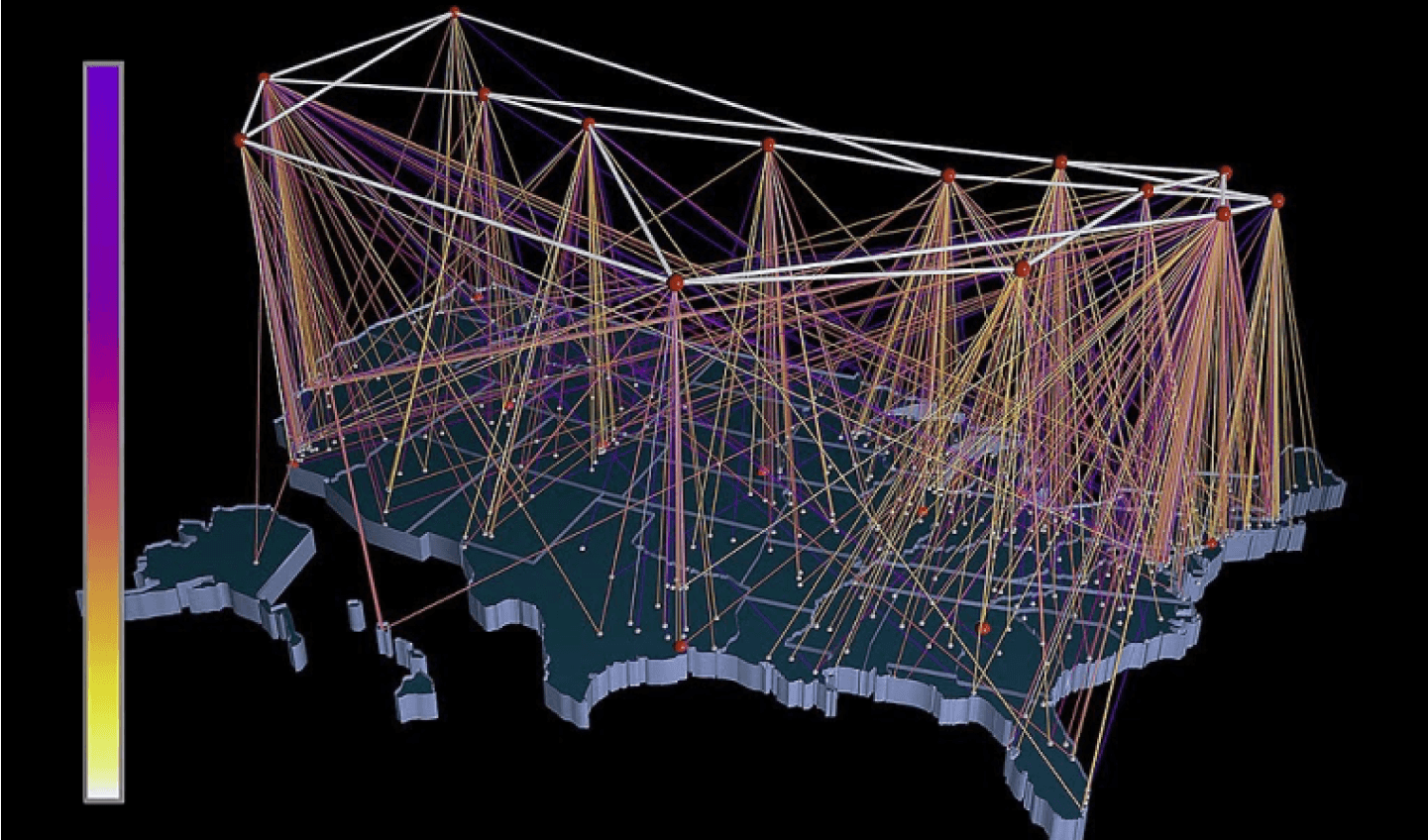

- An Outlook on Distributed Training in the Inference-Compute Paradigm: The large number of forward passes performed during reinforcement learning lowers communication requirements and fundamentally shifts compute infrastructure requirements, making globally distributed training the path forward. We explain why and share our research plans for the coming months.

- We are hiring: This year, we will train a fully open reproduction of o3, enabling everyone globally to contribute compute resources permissionlessly. If you want to be part of this, join our research team.

Research Preview: 10x Faster Training for State-of-the-Art Mathematical Reasoning

OpenAI’s o1 and o3 represent a major shift in how language models are trained and used - by spending more time thinking about problems during inference, they can better handle complex tasks like solving math problems or writing code.

Reasoning models like these are trained differently compared to traditional chat models: Given a set of problems, they generate candidate solutions that are assigned rewards based on the solutions’ correctness, which allows the models to learn from their own answers and iteratively self-improve without training on human task demonstrations. This iterative process is called online reinforcement learning (RL).
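The sample-and-score step described above can be sketched as follows. This is a minimal illustration, not the training code of any specific model: `score_candidates`, `sample_fn`, and the exact-match check are hypothetical stand-ins, and the policy update that would consume the rewards is left out.

```python
from typing import Callable, List, Tuple


def score_candidates(
    question: str,
    reference_answer: str,
    sample_fn: Callable[[str], str],
    num_samples: int = 4,
) -> List[Tuple[str, float]]:
    """Sample candidate answers and give each a binary correctness reward.

    `sample_fn` is a hypothetical stand-in for drawing one answer from the
    current policy. Exact string match is an assumed, simplified verifier.
    """
    scored = []
    for _ in range(num_samples):
        candidate = sample_fn(question)
        reward = 1.0 if candidate.strip() == reference_answer.strip() else 0.0
        scored.append((candidate, reward))
    return scored


if __name__ == "__main__":
    # A deterministic stand-in for the policy, used only for illustration.
    canned = iter(["4", "5", "4 ", "3"])
    print(score_candidates("What is 2 + 2?", "4", lambda q: next(canned)))
```

In an online RL loop, the scored candidates would feed a gradient update, and the updated model would then generate the next batch of candidates.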

PRIME-RL, a recent piece of work from Tsinghua University and UIUC, has introduced an online RL algorithm that leverages rewards from implicit process reward models. Using this approach, the authors trained Eurus-2-7B-PRIME, which (prior to the release of Deepseek-R1) substantially outperformed all other 7B parameter models as well as bigger models like GPT-4o on mathematical reasoning benchmarks.

While PRIME-RL and related methods lead to great performance, online reinforcement learning tends to be unstable and quite expensive compared to supervised training: since we have to sample solutions from our model at every training step, the whole training process takes a long time.

Better Initializations with Synthetic Reasoning Data

The online RL procedure that led to Eurus-2-7B-PRIME was initialized with Eurus-2-7B-SFT, a model that was fine-tuned on 230k math questions and solutions in a supervised manner. The solutions for this dataset were generated with Llama-3.1-70B-Instruct, with a specific system prompt that asks the model to reason step by step and indicate what action it is currently taking by outputting strings such as “[ASSESS]”, “[ADVANCE]” or “[VERIFY]” as part of its chain of thought.

While this system prompt helps generate data that nudges the model towards structured reasoning, it is clearly possible to generate higher-quality SFT data with the help of existing reasoning models and thus train a better SFT model. We hypothesize that this can be very impactful: by starting RL from a better policy that already imitates desired reasoning behaviors such as self-correction, we won’t have to wait for these behaviors to emerge from reinforcement learning alone, and will thus get to better model performance faster.

To obtain a synthetic fine-tuning dataset, we used QwQ-32B, the best open reasoning model at the time of writing this article. We started from a set of mathematics questions with ground truth solutions obtained from the NuminaMath dataset and then used QwQ to generate responses. The responses were filtered for correctness (by comparing them to the ground truth solution) and for length (as our base model has a context window of 4096 tokens); no further post-processing was applied.
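The two filters described above can be sketched as follows. This is a hedged illustration rather than the pipeline we ran: the record fields (`question`, `response`, `predicted_answer`, `ground_truth`), the `normalize` rule, and the `count_tokens` callable are all assumptions made for the example, and real answer checking for mathematics usually needs more than string comparison.

```python
from typing import Callable, Dict, List

MAX_TOKENS = 4096  # context window of the base model, as stated in the text


def normalize(answer: str) -> str:
    """Assumed, simplified normalization: drop spaces and lowercase."""
    return answer.strip().replace(" ", "").lower()


def keep_trace(trace: Dict[str, str], count_tokens: Callable[[str], int]) -> bool:
    """Keep a trace only if it fits the context window and its answer is correct."""
    total_tokens = count_tokens(trace["question"]) + count_tokens(trace["response"])
    fits = total_tokens <= MAX_TOKENS
    correct = normalize(trace["predicted_answer"]) == normalize(trace["ground_truth"])
    return fits and correct


def filter_traces(
    traces: List[Dict[str, str]], count_tokens: Callable[[str], int]
) -> List[Dict[str, str]]:
    """Apply the correctness and length filters to a list of traces."""
    return [t for t in traces if keep_trace(t, count_tokens)]
```

Here `count_tokens` would be the tokenizer of the base model in practice; a whitespace split such as `lambda s: len(s.split())` is enough to try the sketch.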

The resulting SFT dataset consisted of 733k questions with corresponding responses. Following the work on Eurus, we fine-tuned the base model Qwen-7B-Math on this dataset and subsequently applied PRIME-RL using the exact same setup and RL data as the original work.

Performance

Using the described methodology, we train INTELLECT-MATH, a model that outperforms Eurus-2-7B-PRIME on several mathematical reasoning benchmarks and is the best 7B parameter model for mathematical reasoning (prior to the release of Deepseek-R1). Furthermore, we show that an earlier checkpoint of the model was able to match the performance of Eurus-2-7B-PRIME with 10x fewer GPU hours (47 RL steps for INTELLECT-MATH vs. 592 for Eurus-2-7B-PRIME).

Compared to Eurus-2-7B-PRIME, INTELLECT-MATH-7B-SFT and INTELLECT-MATH-7B exhibit reasoning behavior that is more typical of models like o1 or Deepseek-R1: they naturally summarize the question at hand, verify their reasoning and “think out loud”, whereas Eurus-2-7B-PRIME has to be specifically instructed to do so in its system prompt. Notably, the main difference between INTELLECT-MATH-7B-SFT and INTELLECT-MATH-7B is that the former is much more verbose and engages in a lot of unnecessary self-questioning, which often leads to it getting stuck in infinite reasoning loops. During reinforcement learning, we saw its response length decrease, which indicates that the RL stage refines the reasoning behavior learned through SFT rather than purely imitating it.

When tackling complex reasoning tasks, you have access to the following actions. Use them as needed to progress through your thought process. [ASSESS] [ADVANCE] [VERIFY] [SIMPLIFY] [SYNTHESIZE] [PIVOT] [OUTPUT] You should strictly follow the format below: [ACTION NAME] # Your action step 1 # Your action step 2 # Your action step 3 ... Next action: [NEXT ACTION NAME]

Solve the following math problem efficiently and clearly. Think carefully and step by step about your response and reason before providing a final response. Conclude your response with: Therefore, the final answer is: $\boxed{answer}$. I hope it is correct. Where [answer] is just the final number or expression that solves the problem.

Revisiting our Results After the Release of Deepseek-R1

With the release of Deepseek-R1, we now know much more about how to train a strong reasoning model. For the most part, our results are in line with the findings from yesterday’s paper: The Deepseek team reports that incorporating cold start data for SFT was important for model performance and made R1 a much better model than R1-zero. Furthermore, distillation alone from a strong teacher model without further RL is highly effective.

One result that differs from the findings of the Deepseek team is the effect of reinforcement learning on the length of model responses. Deepseek-R1’s responses became longer as RL training progressed, whereas our RL stage made the model outputs less verbose and shorter on average. Whether this is due to the use of PRIME-RL rather than GRPO for reinforcement learning, differences in our SFT data or another reason entirely is something we will have to study.

NuminaMath-QwQ-CoT-5M: A large synthetic reasoning dataset

Motivated by the good results of our approach, we have decided to go beyond the 766k samples from our SFT data and use QwQ-32B to generate a larger open dataset for the research community. NuminaMath-QwQ-CoT-5M contains five million reasoning traces across 860k mathematics questions in NuminaMath that are verified for correctness. Along with our checkpoints, we hope that this data will be helpful for researchers fine-tuning models and working on other aspects of LLM reasoning such as process reward models.

Going forward, we are particularly excited about leveraging globally distributed compute for generating trillion-token-scale synthetic datasets: Models such as Qwen-Coder, WizardLM, Phi-4, and most notably models distilled from Deepseek-R1, were trained on very large synthetic datasets from strong teacher models and have shown that this is extremely effective for enhancing capabilities.

Dzmitry Bahdanau, the inventor of attention, thinks that "mining verifiable reasoning problems […] in massive numbers" is an important contribution for progress in reasoning. With Deepseek-V3 and Deepseek-R1, we now have open weight models that are competitive with leading closed chat and reasoning models, which means that we are well positioned to leverage our distributed network of GPUs to generate and openly release huge synthetic datasets. Stay tuned for announcements if you want to contribute compute and collaboratively advance open-source AI progress.

An Outlook: Distributed Training in the Inference-Compute Paradigm

With INTELLECT-1, we've demonstrated that large-scale model training is no longer confined to large corporations but can be achieved through distributed, community-driven approaches.

Our research on scaling distributed training to frontier model sizes is making significant progress, and recent developments in inference-time scaling may make this goal even more attainable. We believe that the methods used to train reasoning models are even better suited for distributed approaches than the standard pre-training paradigm.

To quote Semianalysis:

In many cases, these models will run multiple times per backwards pass depending on how much data needs to be pruned or rejected. This leads to an extremely high ratio of forward passes versus backwards passes for post-training whereas in pre-training the ratio is 1 to 1.

This changes the infrastructure requirements heavily for training. For example, having a single large fully connected scale out fabric may not be as necessary as it was in the past. One big positive is that training across geographically distributed datacenters is easier because they can focus purely on data generation and pruning rather than updating the model.

To scale distributed training even further to frontier model sizes, current approaches are constrained by the amount of communication required to synchronize gradients across model replicas. By increasing the ratio of forward passes to backward passes in the inference-compute paradigm, communication becomes less of a bottleneck.

Concretely, to compute a single gradient update, we first perform a rollout phase in which we generate large volumes of samples from our model and assign rewards to them using a reward model or automated verifiers. This rollout phase is time-consuming, leading to far fewer gradient updates than in pre-training.

For example, using PRIME-RL, we sampled four responses for 256 questions at each rollout step to obtain rewards and compute gradients. In this setup, performing a single training step took us more than 10 minutes! Through hyperparameter adjustments (particularly larger rollout phases), it is entirely feasible to design a distributed RL run in a way that is equivalent to a centralized setup.
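To make the numbers above concrete, here is a rough back-of-the-envelope sketch. The rollout size and the 10-minute step time come from the text; the 7B parameter count with 2 bytes per parameter (bf16) and the idea that each replica exchanges one full gradient copy per step are simplifying assumptions, and the estimate ignores all-reduce overhead, compression, and overlap with compute.

```python
def rollout_samples(num_questions: int, samples_per_question: int) -> int:
    """Number of sampled responses needed for one gradient update."""
    return num_questions * samples_per_question


def max_updates_per_hour(step_seconds: float) -> float:
    """Upper bound on gradient updates per hour given the time per step."""
    return 3600.0 / step_seconds


def avg_sync_bandwidth_gbit(
    num_params: float, bytes_per_param: int, step_seconds: float
) -> float:
    """Average Gbit/s needed to move one gradient copy per step (assumed model)."""
    bits_per_step = num_params * bytes_per_param * 8
    return bits_per_step / step_seconds / 1e9


if __name__ == "__main__":
    step_seconds = 600.0  # "more than 10 minutes", so this is a lower bound
    print(rollout_samples(256, 4))                 # samples per training step
    print(max_updates_per_hour(step_seconds))      # at most this many updates/hour
    print(avg_sync_bandwidth_gbit(7e9, 2, step_seconds))
```

Under these assumptions, a step consumes 1024 sampled responses, there are at most 6 updates per hour, and the average synchronization bandwidth stays well below 1 Gbit/s. Making the rollout phase larger lowers this figure further.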

This means one thing: there’s no inherent disadvantage to a distributed training setup. With access to sufficient datasets, enough crowdsourced GPUs and the same knowledge as frontier labs, it will be possible to train frontier reasoning models in a distributed fashion and make them accessible to everyone.

What’s Next?

We are continuing to work on research with the goal of building open frontier models. Here are some of the problems we are currently tackling to improve models' reasoning capabilities:

- Scaling Distributed Reinforcement Learning to o3-scale: To replicate o3, we will need to scale reinforcement learning to larger base models and datasets, and train for a much longer time. The compute required to train reasoning models will likely exceed even pre-training requirements, necessitating the pooling of global compute resources. This comes with many interesting design challenges, especially in a low-communication regime. Therefore, we are currently extending our training framework prime to support globally distributed reinforcement learning.

- Trillion-Token Synthetic Datasets: We want to collect more synthetic data to improve model capabilities. To do so, we need to design new recipes to enhance data quality and set up infrastructure that enables data generation in a distributed network of GPUs.

- Training Agents end-to-end: To fully leverage inference-time compute for scientific progress, we need to teach reasoning models to use tools such as code interpreters and other software. Reinforcement learning offers a great framework to optimize agents with tool-use end to end.

If this sounds exciting to you, join us. We are a small, talent-dense and highly ambitious team and are looking for engineers and researchers to help us build open source AGI.